Comparing Load Balancing Algorithms

Round Robin distributes requests cyclically and suits servers with identical specs. Weighted Round Robin factors in server capacity, assigning more requests to higher-capacity servers. Least Connections directs new connections to the server with the fewest active connections, while Weighted Least Connections combines server capacity with current connection counts. Random spreads requests roughly evenly when traffic is high and suits clusters with similar configurations.

Overview

So your load balancer supports multiple load balancing algorithms, but you don't know which one to pick? You will in a minute.

In this post, we compare 5 common load balancing algorithms, highlighting their main characteristics and pointing out where each one fits well and where it doesn't. Let's begin.

Round Robin

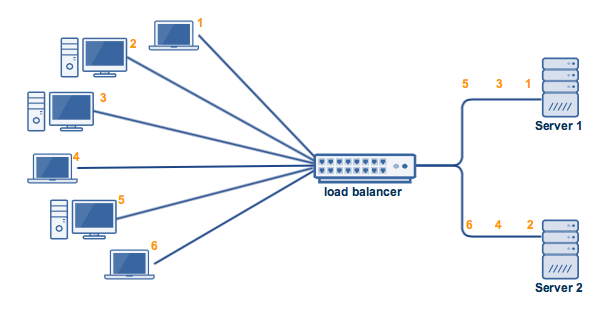

Round Robin is undoubtedly the most widely used algorithm. It's easy to implement and easy to understand. Here's how it works. Let's say you have 2 servers waiting for requests behind your load balancer. Once the first request arrives, the load balancer will forward that request to the 1st server. When the 2nd request arrives (presumably from a different client), that request will then be forwarded to the 2nd server.

Because the 2nd server is the last in this cluster, the next request (i.e., the 3rd) will be forwarded back to the 1st server, the 4th request back to the 2nd server, and so on, in a cyclical fashion.
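The cycle described above can be sketched in a few lines of Python. This is only an illustration, not how any particular load balancer implements it; the server names and the `round_robin` helper are made up for the example.

```python
from itertools import cycle


def round_robin(servers):
    """Return an iterator that hands out servers in a repeating cycle."""
    return cycle(servers)


# Hypothetical two-server cluster, as in the example above.
picker = round_robin(["server1", "server2"])

# Each incoming request takes the next server in the cycle.
assignments = [next(picker) for _ in range(4)]
print(assignments)  # ['server1', 'server2', 'server1', 'server2']
```

Note that the picker knows nothing about the servers themselves. It only remembers whose turn is next.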

As you can see, the method is very simple. However, it won't do well in certain scenarios.

For example, what if Server 1 had more CPU, RAM, and other specs than Server 2? Server 1 should be able to handle a higher workload than Server 2, right?

Unfortunately, a load balancer running on a Round Robin algorithm won't be able to treat the two servers accordingly. In spite of the two servers' disproportionate capacities, the load balancer will still distribute requests equally. As a result, Server 2 can get overloaded faster and probably even go down. You wouldn't want that to happen.

The Round Robin algorithm is best for clusters consisting of servers with identical specs. For other situations, you might want to look at other algorithms, like the ones below.

Weighted Round Robin

For the 2nd scenario mentioned above, i.e., Server 1 has higher specs than Server 2, you might prefer an algorithm that assigns more requests to the server with a higher capability of handling greater load. One such algorithm is the Weighted Round Robin.

The Weighted Round Robin is similar to the Round Robin in that the way requests are assigned to the nodes is still cyclical, albeit with a twist. The node with the higher specs will be apportioned a greater number of requests.

But how would the load balancer know which node has a higher capacity? Simple. You tell it beforehand. Basically, when you set up the load balancer, you assign "weights" to each node. The node with the higher specs should, of course, be given the higher weight.

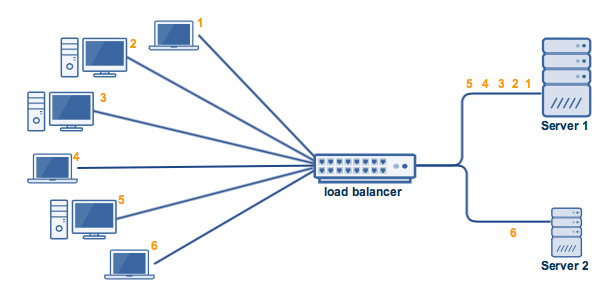

You usually specify weights in proportion to actual capacities. So, for example, if Server 1's capacity is 5 times Server 2's, then you can assign it a weight of 5 and Server 2 a weight of 1.

So when clients start coming in, the first 5 will be assigned to Server 1 and the 6th to Server 2. If more clients come in, the same sequence will be followed. The 7th, 8th, 9th, 10th, and 11th will all go to Server 1, the 12th to Server 2, and so on.
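Here is a minimal Python sketch of that 5:1 sequence. It assumes the simplest possible scheme, in which each server appears in the rotation as many times as its weight; the `weighted_round_robin` helper and server names are hypothetical.

```python
from itertools import cycle


def weighted_round_robin(weights):
    """Cycle through servers, repeating each one `weight` times per round."""
    # Assumption: weights are small positive integers set by the administrator.
    schedule = [
        server
        for server, weight in weights.items()
        for _ in range(weight)
    ]
    return cycle(schedule)


# Server 1 has weight 5 and Server 2 has weight 1, as in the example above.
picker = weighted_round_robin({"server1": 5, "server2": 1})
assignments = [next(picker) for _ in range(12)]

# Requests 1-5 and 7-11 go to server1; requests 6 and 12 go to server2.
print(assignments)
```

Some load balancers interleave the servers within each round rather than sending consecutive requests to the same node. The overall 5:1 ratio stays the same either way.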

Capacity isn't the only basis for choosing the Weighted Round Robin (WRR) algorithm. Sometimes you'll want one server to get substantially fewer connections than an equally capable server, for example because the first server is running business-critical applications and you don't want it to be easily overloaded.

Least Connections

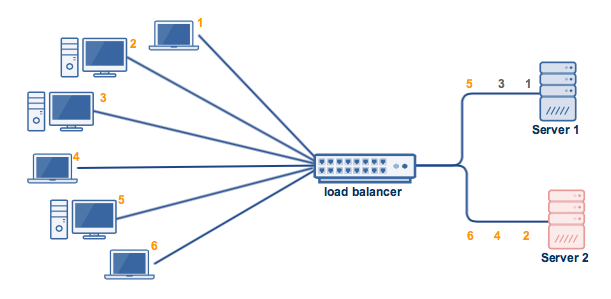

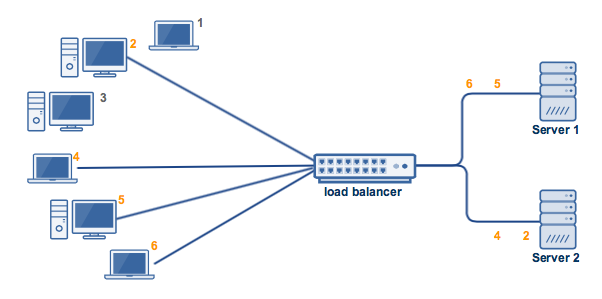

There can be instances when, even if two servers in a cluster have exactly the same specs (see first example/figure), one server can still get overloaded considerably faster than the other. One possible reason would be that clients connecting to Server 2 stay connected much longer than those connecting to Server 1.

This can cause the total current connections in Server 2 to pile up, while those of Server 1 (with clients connecting and disconnecting over shorter times) would virtually remain the same. As a result, Server 2's resources can run out faster. This is depicted in the figure, wherein clients 1 and 3 have already disconnected, while 2, 4, 5, and 6 are still connected.

The Least Connections algorithm would be a better fit in situations like this. This algorithm takes into consideration the number of current connections each server has. When a client attempts to connect, the load balancer determines which server has the fewest connections and assigns the new connection to that server.

So, continuing our last example, if client 6 attempts to connect after clients 1 and 3 have already disconnected but 2 and 4 are still connected, the load balancer will assign client 6 to Server 1 instead of Server 2.
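The selection step can be sketched in Python as follows. The connection counts are an assumption chosen to mirror the example (Server 1 holding one remaining connection, Server 2 holding clients 2 and 4), and `least_connections` is a hypothetical helper, not a real API.

```python
def least_connections(active):
    """Return the server with the fewest active connections.

    Ties go to whichever server is listed first.
    """
    return min(active, key=active.get)


# Assumed state just before client 6 arrives.
active = {"server1": 1, "server2": 2}

chosen = least_connections(active)
active[chosen] += 1  # the new connection now counts against that server

print(chosen)  # server1
```

A real load balancer would also decrement the count whenever a client disconnects, which is what keeps the picture up to date.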

Weighted Least Connections

The Weighted Least Connections algorithm does to Least Connections what Weighted Round Robin does to Round Robin. That is, it introduces a "weight" component based on the respective capacities of each server. Like in the Weighted Round Robin, you must specify each server's "weight" beforehand.

A load balancer that implements the Weighted Least Connections algorithm considers both the weight/capacity of each server and the number of clients currently connected to it.
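One common way to combine the two factors, shown here purely as an assumption since the exact formula varies between products, is to pick the server with the lowest ratio of active connections to weight. The `weighted_least_connections` helper and the numbers are illustrative only.

```python
def weighted_least_connections(active, weights):
    """Return the server with the lowest connections-to-weight ratio."""
    # Assumption: every weight is a positive number, so division is safe.
    return min(active, key=lambda server: active[server] / weights[server])


weights = {"server1": 5, "server2": 1}
active = {"server1": 4, "server2": 1}

# server1 ratio: 4 / 5 = 0.8, server2 ratio: 1 / 1 = 1.0
chosen = weighted_least_connections(active, weights)
print(chosen)  # server1
```

Plain Least Connections would have picked server2 here because it has fewer connections. The weight tips the decision toward the server with more spare capacity.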

Random

As its name implies, this algorithm matches clients and servers randomly, i.e., using an underlying random number generator. When the load balancer receives a large number of requests, a Random algorithm will distribute them roughly evenly across the nodes. So like Round Robin, the Random algorithm is sufficient for clusters consisting of nodes with similar configurations (CPU, RAM, etc.).
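A small Python simulation can illustrate why the split evens out as traffic grows. It assumes a uniform random pick; the `random_pick` helper, the seed, and the request count are arbitrary choices for the example.

```python
import random
from collections import Counter


def random_pick(servers, rng):
    """Choose a server uniformly at random."""
    return rng.choice(servers)


# A seeded generator keeps the demonstration repeatable.
rng = random.Random(42)
servers = ["server1", "server2"]

# Simulate 10,000 requests and count where each one lands.
counts = Counter(random_pick(servers, rng) for _ in range(10_000))
print(counts)
```

With only a handful of requests the split can be quite lopsided. It is only over many requests that each server ends up with close to half.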

Curious to see these algorithms in action and discover which one best suits your infrastructure? Book a demo with JSCAPE MFT Gateway today and take the first step toward optimizing your network's load balancing.

Get Started

JSCAPE MFT Gateway is a load balancer and reverse proxy that supports all 5 load balancing algorithms. If you want to try it out, you may download a free, fully functional evaluation edition now.